If you want to run CogVLM2 on Hugging Face Spaces in 2025, this step-by-step guide walks you through everything from setup to deployment.

With years of experience in AI development, NLP models, and Hugging Face integrations, I’ll break it down clearly so you don’t waste time hunting for scattered resources.

To deploy CogVLM2 on Hugging Face Spaces, you need to

(1) set up a Hugging Face account,

(2) create a Space,

(3) choose the right SDK like Gradio or Streamlit,

(4) upload CogVLM2 model files or load from huggingface.co, and

(5) configure dependencies for smooth deployment.

Once done, your app can be shared publicly or kept in a private Space.

This guide provides a complete breakdown: installation, configuration, performance tuning, troubleshooting, and FAQs.

What is CogVLM2?

CogVLM2 is a vision-language foundation model designed for multimodal tasks. It can handle both text and image inputs together, making it powerful for real-world applications like document understanding, image captioning, question answering, and AI assistants.

Key features of CogVLM2:

- Supports multimodal learning (images + text).

- Improves on the original CogVLM, with support for higher-resolution images (up to 1344×1344) and an 8K context length.

- Weights are published on the Hugging Face Hub, so a Space can load them directly.

- Useful for developers looking to build scalable AI apps in 2025.

What are Hugging Face Spaces?

Spaces on Hugging Face are a way to host, share, and run machine learning apps directly in the browser. You don’t need separate hosting: each Space runs on Hugging Face infrastructure, on the CPU or GPU hardware you choose.

- Public Spaces on huggingface.co/spaces can be explored and duplicated (forked).

- Spaces can be built with Gradio or Streamlit, among other SDKs.

- You can also create private Spaces that are visible only to you or to members of your organization.

Why use Spaces for CogVLM2?

You can deploy CogVLM2 as an app quickly and let other people interact with it live, without building a backend yourself.

Step-by-Step: Deploy CogVLM2 on Hugging Face Spaces

Here’s a complete setup and deployment tutorial.

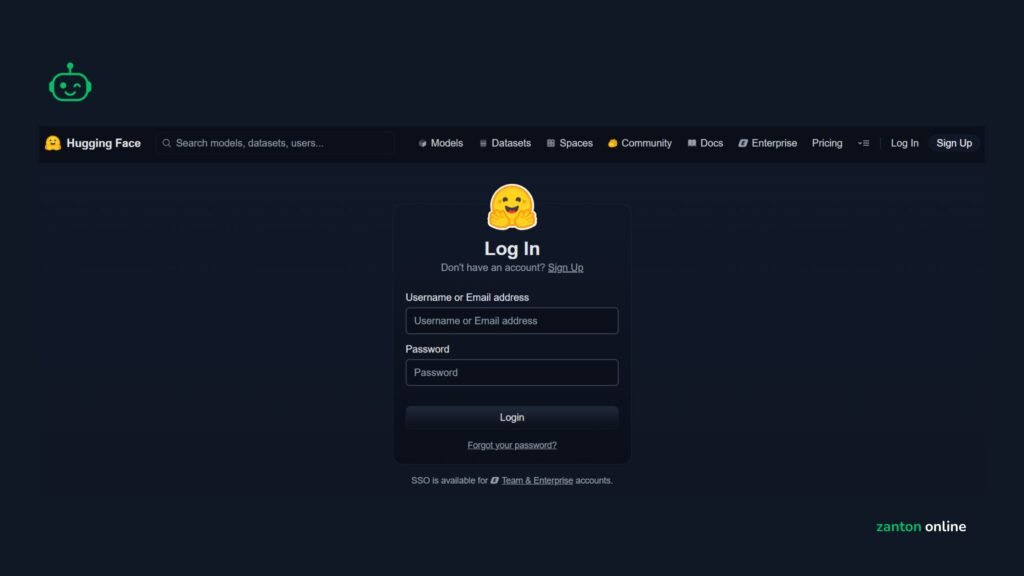

Step 1: Create a Hugging Face Account

- Go to huggingface.co.

- Sign up with GitHub, Google, or email.

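- Once logged in, create an Access Token under Settings → Access Tokens (needed to push code or call the Hub API from your machine, and to access private Spaces programmatically).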
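If you plan to create or update the Space from your own machine, a minimal sketch like the one below keeps the token out of your code. It assumes the token is stored in an HF_TOKEN environment variable (the variable huggingface_hub also reads on its own) and that current user access tokens start with hf_; read_hf_token and login_from_env are hypothetical helpers, not library functions.

```python
import os
from typing import Mapping, Optional


def read_hf_token(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the access token stored in HF_TOKEN, failing early with a clear message."""
    env = os.environ if env is None else env
    token = env.get("HF_TOKEN", "").strip()
    if not token:
        raise RuntimeError("Set HF_TOKEN to an access token from Settings -> Access Tokens.")
    # Assumption: current user access tokens start with "hf_"; anything else is likely a paste error.
    if not token.startswith("hf_"):
        raise ValueError("HF_TOKEN does not look like a Hugging Face access token.")
    return token


def login_from_env() -> None:
    """Log in to the Hub with the token from HF_TOKEN (huggingface_hub is imported lazily)."""
    from huggingface_hub import login

    login(token=read_hf_token())
```

Because huggingface_hub already picks up HF_TOKEN by itself, login_from_env() mainly serves to fail early with a readable error when the variable is missing or malformed.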

Step 2: Create a New Space

- From your profile menu, click New Space (or go to huggingface.co/new-space).

- Choose visibility: Public or Private.

- Name your project (for example, cogvlm2-app).

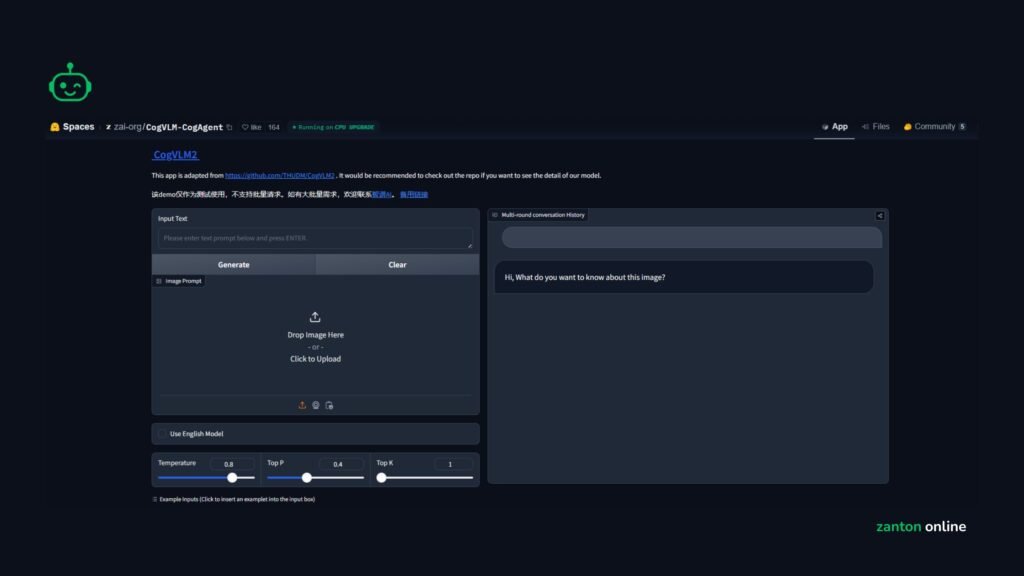
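The same Space can also be created from Python. This sketch assumes the huggingface_hub library and a token with write access; HfApi.create_repo with repo_type="space" and space_sdk is the library's own call, while space_repo_kwargs and create_space are hypothetical helpers.

```python
from typing import Any, Dict

# SDK values accepted by create_repo for Spaces.
VALID_SDKS = {"gradio", "streamlit", "docker", "static"}


def space_repo_kwargs(repo_id: str, sdk: str = "gradio", private: bool = False) -> Dict[str, Any]:
    """Build the keyword arguments for HfApi.create_repo when creating a Space."""
    if repo_id.count("/") != 1:
        raise ValueError("repo_id must look like 'username/space-name'")
    if sdk not in VALID_SDKS:
        raise ValueError(f"unsupported Space SDK: {sdk}")
    return {
        "repo_id": repo_id,
        "repo_type": "space",
        "space_sdk": sdk,
        "private": private,
        "exist_ok": True,  # re-running the script does not fail if the Space already exists
    }


def create_space(repo_id: str, private: bool = False) -> str:
    """Create (or reuse) a Gradio Space and return its URL."""
    from huggingface_hub import HfApi  # imported lazily so the helper above has no dependencies

    return str(HfApi().create_repo(**space_repo_kwargs(repo_id, private=private)))
```

For example, create_space("your-username/cogvlm2-app", private=True), where your-username is a placeholder for your own account.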

Step 3: Select SDK

Hugging Face Spaces supports:

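- Gradio (the most common choice for model demos).

- Streamlit (for Python dashboards).

- Static HTML/JS (not suitable for CogVLM2, since it cannot run the Python model).

For text and image inputs, Gradio is the simplest fit: it has ready-made image and text components.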

Step 4: Add CogVLM2 Model

Two main ways:

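- Pull directly from the Hugging Face Hub (recommended). The English chat model is published as THUDM/cogvlm2-llama3-chat-19B, and it ships custom modeling code, so trust_remote_code=True is required:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# CogVLM2's modeling code lives in the model repo, so trust_remote_code=True is required.
tokenizer = AutoTokenizer.from_pretrained("THUDM/cogvlm2-llama3-chat-19B", trust_remote_code=True)
# torch_dtype="auto" keeps the checkpoint's stored precision instead of upcasting to float32.
model = AutoModelForCausalLM.from_pretrained(
    "THUDM/cogvlm2-llama3-chat-19B", torch_dtype="auto", trust_remote_code=True
)
```

- Upload your own files into the Space repository.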

Step 5: Setup requirements.txt

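Inside your Space repo, create requirements.txt. Besides transformers, torch, and gradio, CogVLM2's remote modeling code imports a few more libraries; the extra lines below follow the dependencies THUDM's demo lists. Check the model card for exact version pins, since the remote code may not work with every transformers release:

```text
transformers
torch
torchvision
xformers
einops
accelerate
pillow
gradio
```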

Step 6: Create app.py

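Example Gradio app. It takes an image plus a question and assumes a GPU Space with enough memory for the BF16 weights; the build_conversation_input_ids call and the pad_token_id value follow THUDM's CogVLM2 demo code:

```python
import gradio as gr
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

MODEL_ID = "THUDM/cogvlm2-llama3-chat-19B"
DEVICE, DTYPE = "cuda", torch.bfloat16  # bfloat16 needs an Ampere-or-newer GPU

# CogVLM2 ships custom modeling code, so trust_remote_code=True is required.
tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    MODEL_ID, torch_dtype=DTYPE, trust_remote_code=True
).to(DEVICE).eval()

def predict(image, text):
    if image is None:
        return "Please upload an image."
    # build_conversation_input_ids is defined in the model's remote code.
    conv = model.build_conversation_input_ids(
        tokenizer, query=text, history=[], images=[image.convert("RGB")], template_version="chat"
    )
    inputs = {k: conv[k].unsqueeze(0).to(DEVICE) for k in ("input_ids", "token_type_ids", "attention_mask")}
    inputs["images"] = [[conv["images"][0].to(DEVICE).to(DTYPE)]]
    with torch.no_grad():
        out = model.generate(**inputs, max_new_tokens=256, pad_token_id=128002)
    # Decode only the newly generated tokens, not the prompt.
    return tokenizer.decode(out[0, inputs["input_ids"].shape[1]:], skip_special_tokens=True)

iface = gr.Interface(fn=predict, inputs=[gr.Image(type="pil"), "text"], outputs="text")
iface.launch()
```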

Step 7: Commit and Build

- Push your files (app.py, requirements.txt).

- Hugging Face will auto-build your Space.

- Done: your CogVLM2 app is live!
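If you prefer uploading from a script over git, huggingface_hub's upload_folder can push both files in one commit. The sketch first checks that app.py and requirements.txt exist locally; missing_space_files and push_space are hypothetical helpers, and the repo ID you pass is a placeholder.

```python
from pathlib import Path
from typing import Iterable, List

REQUIRED_FILES = ("app.py", "requirements.txt")


def missing_space_files(folder: str, required: Iterable[str] = REQUIRED_FILES) -> List[str]:
    """Return the required files that are not present in the local folder."""
    root = Path(folder)
    return [name for name in required if not (root / name).is_file()]


def push_space(folder: str, repo_id: str) -> None:
    """Upload app.py and requirements.txt to a Space in a single commit."""
    missing = missing_space_files(folder)
    if missing:
        raise FileNotFoundError(f"missing before upload: {', '.join(missing)}")
    from huggingface_hub import HfApi  # imported lazily

    HfApi().upload_folder(
        folder_path=folder,
        repo_id=repo_id,
        repo_type="space",
        allow_patterns=list(REQUIRED_FILES),  # upload only these two files
        commit_message="Add CogVLM2 Gradio app",
    )
```

For example, push_space(".", "your-username/cogvlm2-app"); the Space starts rebuilding once the commit lands.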

Use Cases of CogVLM2 in Hugging Face Spaces

- Document AI with Hugging Face LayoutLMv2 + CogVLM2 for reading PDFs.

- Multimodal Chatbots that can “see” images and “talk” in text.

- E-commerce image-based product search.

- Education apps to explain graphs, diagrams, and handwritten notes.

Benefits of Using Hugging Face Spaces for CogVLM2

- Zero server setup (runs on Hugging Face cloud).

- Collaboration-ready (public Spaces let users test apps instantly).

- Scalable deployment with GPU options.

- Private option with Hugging Face Private Spaces.

Troubleshooting CogVLM2 Deployment

Issue 1: Out of memory error

- Solution: In Settings, upgrade the Space to GPU hardware with enough memory for the checkpoint, or switch to a quantized checkpoint.

Issue 2: Slow loading

- Solution: Use quantized weights, such as the 4-bit THUDM/cogvlm2-llama3-chat-19B-int4 checkpoint, which are smaller to download and load.
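To decide between the full-precision and 4-bit checkpoints, you can compare the Space's GPU memory with each checkpoint's requirement. The figures below (about 42 GB for BF16, 16 GB for Int4) are approximate values from the CogVLM2 README at the time of writing; treat them as assumptions and re-check the model card. pick_checkpoint and detect_gpu_memory_gb are hypothetical helpers.

```python
from typing import List, Tuple

# Assumed approximate inference memory per checkpoint, largest precision first.
CHECKPOINTS: List[Tuple[str, float]] = [
    ("THUDM/cogvlm2-llama3-chat-19B", 42.0),       # BF16/FP16 weights
    ("THUDM/cogvlm2-llama3-chat-19B-int4", 16.0),  # 4-bit weights (assumed to need bitsandbytes)
]


def pick_checkpoint(gpu_memory_gb: float) -> str:
    """Return the highest-precision checkpoint whose assumed requirement fits in memory."""
    for model_id, needed_gb in CHECKPOINTS:
        if gpu_memory_gb >= needed_gb:
            return model_id
    raise RuntimeError(
        f"{gpu_memory_gb:.0f} GB is below every listed checkpoint; upgrade the Space hardware."
    )


def detect_gpu_memory_gb() -> float:
    """Total memory of GPU 0 in GB, or 0.0 when no CUDA device is visible (torch imported lazily)."""
    import torch

    if not torch.cuda.is_available():
        return 0.0
    return torch.cuda.get_device_properties(0).total_memory / 1024**3
```

Inside app.py you could then call pick_checkpoint(detect_gpu_memory_gb()) before from_pretrained, so the same code runs on large and mid-sized GPUs.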

Issue 3: Dependency errors

- Solution: Check requirements.txt formatting (one package per line) and pin versions that are known to work together.
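A quick local check catches the most common formatting slip: several packages on one line. This is a rough, regex-based lint written for illustration, not a full PEP 508 parser. It skips blank lines, comments, pip options such as -r, and URL requirements, and flags anything else that does not look like a single requirement; lint_requirements is a hypothetical helper.

```python
import re
from typing import List, Tuple

# Rough shape of one requirement: name, optional [extras], optional version
# specifiers, optional "; marker". Deliberately simpler than PEP 508.
_REQ = re.compile(
    r"^[A-Za-z0-9][A-Za-z0-9._-]*"
    r"(\[[A-Za-z0-9._,\s-]+\])?"
    r"\s*((===|==|~=|!=|<=|>=|<|>)\s*[A-Za-z0-9.*+!_-]+"
    r"(\s*,\s*(===|==|~=|!=|<=|>=|<|>)\s*[A-Za-z0-9.*+!_-]+)*)?"
    r"\s*(;.*)?$"
)


def lint_requirements(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, line) pairs that do not look like one requirement per line."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("-") or "://" in line:
            continue  # blank line, pip option, or URL requirement: not checked here
        if not _REQ.match(line):
            problems.append((number, raw))
    return problems
```

Running it over the file's contents before pushing is cheaper than waiting for a failed build.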

Issue 4: Space not rebuilding

- Solution: Manually restart build in Settings → Restart Space.
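Restarts can also be triggered from Python. This sketch assumes huggingface_hub's HfApi.get_space_runtime and HfApi.restart_space (whose factory_reboot flag rebuilds without the cache); the mapping from runtime stage to action is a judgment call rather than an official rule, and next_action and check_and_restart are hypothetical helpers.

```python
def next_action(stage: str) -> str:
    """Map a Space runtime stage name to a suggested next step."""
    stage = stage.upper()
    if stage in {"BUILDING", "RUNNING_BUILDING"}:
        return "wait"
    if stage in {"BUILD_ERROR", "RUNTIME_ERROR"}:
        return "restart"
    if stage in {"CONFIG_ERROR", "NO_APP_FILE"}:
        # A restart will not help until README.md or app.py is fixed in the repo.
        return "fix-files"
    return "none"


def check_and_restart(repo_id: str, factory_reboot: bool = False) -> str:
    """Read the Space's stage and restart it when the build or runtime has failed."""
    from huggingface_hub import HfApi  # imported lazily

    api = HfApi()
    stage = api.get_space_runtime(repo_id).stage
    action = next_action(str(getattr(stage, "value", stage)))
    if action == "restart":
        # factory_reboot=True rebuilds the Space from scratch instead of reusing cached layers.
        api.restart_space(repo_id, factory_reboot=factory_reboot)
    return action
```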

CogVLM2 vs Hugging Face LayoutLMv2

| Feature | CogVLM2 | Hugging Face LayoutLMv2 |
|---|---|---|
| Input type | Text + Images (multimodal) | Documents (text + layout + OCR) |
| Best for | Vision-Language tasks | Document AI |
| Deployment on Spaces | Easily supported with Gradio | Supported with Gradio |
| Use case examples | Chatbots, captioning | Invoice parsing, OCR tasks |

Frequently Asked Questions (FAQs)

1. What is CogVLM2?

CogVLM2 is a multimodal AI model that processes both images and text, making it ideal for interactive applications like chatbots and document readers.

2. How do I deploy CogVLM2 on Hugging Face Spaces?

You create a Space on Hugging Face, add Gradio or Streamlit, load the CogVLM2 model, and host it directly.

3. Can I use CogVLM2 in Hugging Face Private Spaces?

Yes, you can enable private mode for internal or restricted projects.

4. Do I need GPU for CogVLM2 on Spaces?

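In practice, yes. The free CPU hardware has far less memory than the 19B checkpoint needs, so plan on GPU hardware even for testing, and choose a larger GPU (or the Int4 checkpoint) for production.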

5. What is the difference between CogVLM2 and Hugging Face LayoutLMv2?

CogVLM2 handles general vision-language tasks, while LayoutLMv2 is focused on document AI and structured data extraction.

6. Can I integrate CogVLM2 with Gradio?

Yes, Gradio is the simplest SDK for building a text+image web interface quickly.

7. How do I reduce Space build failures?

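Check and pin your dependencies, read the build logs for the first error, restart builds after fixing it, and for Docker Spaces start from the base images recommended in the documentation.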

8. Is CogVLM2 free on Hugging Face Spaces?

Creating a Space is free, but the free tier runs on basic CPU hardware that cannot hold CogVLM2. GPU hardware is billed, so plan for a paid upgrade.

9. Can I fork other CogVLM2 Spaces?

Yes. Browse huggingface.co/spaces, open an existing CogVLM2 Space, and use Duplicate this Space to copy it into your own account.
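Duplicating can also be scripted. This sketch assumes huggingface_hub's HfApi.duplicate_space; duplicate_target and duplicate are hypothetical helpers, and the Space IDs in the usage note are placeholders, not real CogVLM2 deployments.

```python
def duplicate_target(from_id: str, username: str) -> str:
    """Compute 'username/space-name' for a copy of another user's Space."""
    owner, sep, name = from_id.partition("/")
    if not sep or not owner or not name or "/" in name:
        raise ValueError("from_id must look like 'owner/space-name'")
    return f"{username}/{name}"


def duplicate(from_id: str, username: str, private: bool = True) -> str:
    """Copy an existing Space into your account and return the new Space's URL."""
    from huggingface_hub import HfApi  # imported lazily

    url = HfApi().duplicate_space(from_id, to_id=duplicate_target(from_id, username), private=private)
    return str(url)
```

For example, duplicate("some-owner/cogvlm2-demo", "your-username") would create your-username/cogvlm2-demo as a private Space.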

10. Does CogVLM2 support API access in Spaces?

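For Gradio apps, yes. Once the Space is running, its endpoints can be called programmatically (for example with the gradio_client Python library), and the app's "Use via API" link lists the available endpoints.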
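From Python, the gradio_client package can call a running Gradio Space. This sketch assumes a Space whose gr.Interface takes an image and a question, in that order, and exposes the default /predict endpoint. ask_space is a hypothetical helper; its client_cls and wrap_file parameters exist only so it can be tested without network access.

```python
from typing import Any, Callable, Optional


def ask_space(
    space_id: str,
    image_path: str,
    question: str,
    client_cls: Optional[Callable[[str], Any]] = None,
    wrap_file: Optional[Callable[[str], Any]] = None,
) -> Any:
    """Send one image and one question to a Gradio Space and return its answer."""
    if client_cls is None or wrap_file is None:
        # Real usage needs `pip install gradio_client`; imported lazily so fakes can be injected.
        from gradio_client import Client, handle_file

        client_cls = client_cls or Client
        wrap_file = wrap_file or handle_file
    client = client_cls(space_id)
    # gr.Interface publishes its function under the default "/predict" endpoint.
    return client.predict(wrap_file(image_path), question, api_name="/predict")
```

For example, ask_space("your-username/cogvlm2-app", "photo.jpg", "What is in this image?"), where the Space ID is a placeholder. Private Spaces additionally need your access token; check the gradio_client documentation for how to pass it.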

Conclusion

Deploying CogVLM2 on Hugging Face Spaces in 2025 is one of the fastest ways to build and share an AI app. By combining Hugging Face’s Spaces hosting platform with CogVLM2’s multimodal features, developers get both simplicity and scalability.

Whether you run it in a public Space for collaboration or in a private Space for enterprise needs, the process is almost the same: set up an account, create a Space, load CogVLM2, and go live.

If you are experimenting with LayoutLMv2 or other Hugging Face models, you can load them alongside CogVLM2 in the same Space for more advanced use cases, as long as the Space hardware has room for both.

This guide should give you everything you need to set up, run, troubleshoot, and scale your CogVLM2 applications in Hugging Face Spaces.